The prevalence of AI-generated summaries within search engine results has increased dramatically over the past two years. An ongoing weekly study by Advanced Web Ranking showed that as of January 5th, 2026, Google’s search engine produced an AI Overview on 60.2% of queries,[1] compared to only 12.4% as of July 2024.[2]

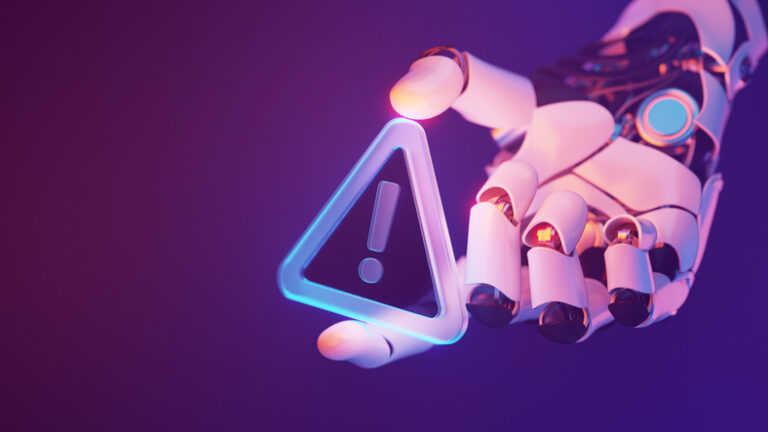

But the rise in AI summaries does not necessarily coincide with an increase in the accuracy of the underlying large language models (LLMs). According to some sources, the latest models actually show an increase in “hallucinations,” a phenomenon in which the LLM inserts fabricated information into a response.[3] Estimates for the frequency of these errors vary by model and query type. One ongoing study of Gen AI’s ability to summarize an article found hallucination rates between 1.8% and 7.8% among the top Gen AI models.[4] Even Google’s Gemini model, when asked how trustworthy AI summaries are, provided this reply: “Recent tests in 2025 indicated that roughly one in five AI Overviews may return inaccurate or misleading answers… Accuracy drops significantly for specific, less-documented topics where the AI may fill ‘data voids’ with off-base information.”[5]

What’s troubling is not only the tendency of AI-generated information to be wrong, but its tendency to be “confidently wrong.” A study conducted by the Columbia Journalism Review[6] in March 2025 found that eight leading Gen AI tools had a collective error rate of 60% when providing citation information that could otherwise have been found easily by clicking through the first few search engine results. The incorrect responses were often presented with complete confidence, with no qualifying statements or expressions of uncertainty. While the tools self-filtered by declining to provide responses in some cases, this behavior varied across tools. ChatGPT, for example, provided a response to every query despite a high error rate in its responses. In contrast, Microsoft Copilot declined to answer more than half of the questions.

What This Means for Actuaries:

Even though LLMs have not yet entered the mainstream of actuarial practice, they may be creeping into the periphery of actuarial work through the use of internet search engines for information retrieval.

Consider just a few possible ways that actuaries may rely on search engines to directly inform their work:

- Searching for laws / regulations / ASOPs related to insurance pricing or reserving.

- Searching for information on actuarial methodologies or exam syllabi topics.

- Searching for information related to emerging sources of risk for purposes of risk classification or for assessing the possibility of adverse deviation of reserves.

Many of these use cases involve niche, less-documented topics, the very sorts of topics on which Gen AI admits it is particularly error-prone.

As a result, actuaries should be particularly careful when using AI summaries from search engines. While it can be tempting to rely on an authoritative-sounding AI summary instead of clicking into search results to read the details of a primary source (e.g., a DOI bulletin, statutory law, an ASOP, or a CAS paper), these AI summaries should at most be used as a starting point, not a final destination, for information. Would any actuary feel comfortable using Microsoft Excel if they knew that 1% of the time, Excel would fabricate a result instead of correctly executing a formula?
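To put the Excel analogy in numbers, the rough sketch below shows how a small per-use error rate compounds over repeated use. It assumes, purely for illustration, that each use fails independently with the same probability. The function name `prob_at_least_one_error` and the usage counts are hypothetical and are not drawn from any of the studies cited here.

```python
def prob_at_least_one_error(error_rate: float, n_uses: int) -> float:
    """Probability of one or more errors across n_uses uses.

    Illustrative assumption: every use fails independently with the
    same probability error_rate. Real-world errors may be correlated.
    """
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    if n_uses < 0:
        raise ValueError("n_uses must be non-negative")
    # Complement of "every single use was correct".
    return 1.0 - (1.0 - error_rate) ** n_uses


if __name__ == "__main__":
    # 1% is the hypothetical rate from the Excel analogy above;
    # the usage counts are arbitrary illustrative values.
    for n in (1, 10, 100):
        print(f"{n:>3} uses: {prob_at_least_one_error(0.01, n):.1%}")
```

Under these simplified assumptions, a 1% per-use error rate implies roughly a 63% chance of at least one fabricated result over 100 uses.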

[1] https://www.advancedwebranking.com/free-seo-tools/google-ai-overview#faq

[2] https://www.advancedwebranking.com/blog/ai-overview-study

[3] https://www.nytimes.com/2025/05/05/technology/ai-hallucinations-chatgpt-google.html

[4] https://github.com/vectara/hallucination-leaderboard

[5] Ironically, Gemini did not cite the source of the test results.

[6] https://www.cjr.org/tow_center/we-compared-eight-ai-search-engines-theyre-all-bad-at-citing-news.php