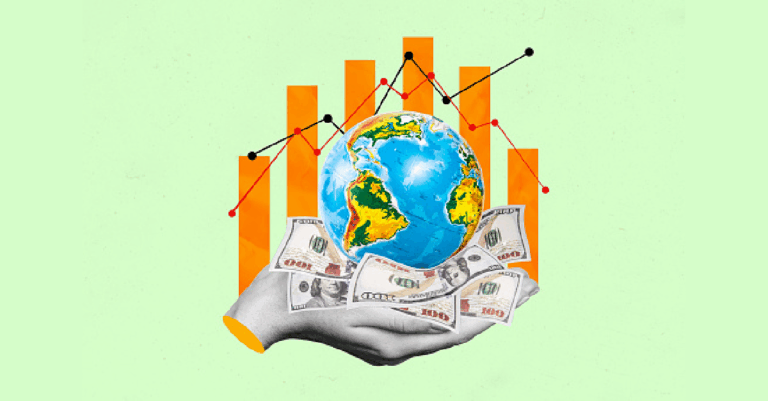

The Earth’s temperature is rising. In 2024, the global average temperature exceeded 1.5° Celsius above pre-industrial levels over a full calendar year for the first time on record. Meanwhile, weather-related damage continues to drive record-breaking insured losses worldwide. Ben Williams and Howard Kunst, presenters of the “Natural Catastrophe Insights and Outlook” RPM session, emphasized that the insurance industry has not kept pace with the rapid evolution of climate-related risks. Climate change is, of course, a global issue affecting every continent. We should therefore acknowledge that non-U.S. insurance markets, which are generally less robust and mature, face a higher risk of a larger insurance protection gap.

To complicate matters further, climate change is a politically turbulent topic. Kunst believes the actuarial catastrophe modeling community is somewhat shielded from the ever-changing politics around it. As actuarial professionals committed to data-driven integrity, we should not let shifting political opinion affect our modeling strategy or research. Nonetheless, we should acknowledge that public policy and public opinion can shape how our actuarial models and predictions are perceived. Kunst meets regularly with legislators on Capitol Hill, federal agencies, the government-sponsored enterprises Freddie Mac and Fannie Mae, and various mortgage lenders. These organizations are concerned about a scenario in which a major weather event leaves most affected U.S. homeowners underinsured and unable to meet their financial obligations. Mortgage lenders would then be on the hook for the unpaid debts on those properties, and the federal government might need to step in to help.

Catastrophe modeling that accounts for climate change is therefore critical to protecting people, their properties and the broader economy. Catastrophe modeling began in a simpler era, when companies modeled observed losses and multiplied them by a single selected trend factor. As catastrophe risk has grown, companies have needed more granular and sophisticated approaches, and many have now progressed to modeling each peril separately. Williams notes that integrating catastrophe modeling with capital modeling is a highly sophisticated approach to exposure management. A further enhancement would be to allocate reinsurance costs to the areas whose modeled catastrophe events drive those costs, allowing for deeper price segmentation and fairer pricing.
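To make the reinsurance-allocation idea concrete, here is a minimal sketch. It assumes, for illustration only, that a total reinsurance cost is split across regions in proportion to each region's modeled catastrophe average annual loss (AAL); the function name, region labels and dollar figures are hypothetical. In practice, reinsurance cost is often driven more by tail metrics (for example, each region's contribution to a probable maximum loss), which could be substituted for AAL in the same structure.

```python
from typing import Dict


def allocate_reinsurance_cost(total_cost: float,
                              modeled_aal: Dict[str, float]) -> Dict[str, float]:
    """Hypothetical pro-rata allocation: each region's share of the total
    reinsurance cost equals its share of the total modeled cat AAL."""
    total_aal = sum(modeled_aal.values())
    if total_aal <= 0:
        raise ValueError("modeled AAL must sum to a positive value")
    return {region: total_cost * aal / total_aal
            for region, aal in modeled_aal.items()}


if __name__ == "__main__":
    # Illustrative inputs only ($ millions); not data from the session.
    aal = {"coastal": 6.0, "inland": 3.0, "mountain": 1.0}
    for region, cost in allocate_reinsurance_cost(20.0, aal).items():
        print(f"{region}: {cost:.2f}")
```

With these made-up inputs, the coastal region, which generates 60% of the modeled AAL, carries 60% of the reinsurance cost, rather than every policyholder bearing the same flat load.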

A slew of catastrophe models is available in the marketplace from a variety of vendors, and many insurers choose to license models from several of them. The problem is that forecasts for certain perils (e.g., hurricane) are highly correlated across vendors, which raises the question: Do these additional forecasts add value if they tend to be right and wrong together? Probably not. It may be a better investment to focus on newer perils where models vary more widely across the marketplace, such as wildfire or severe convective storm. Kunst believes wildfire is currently the most difficult and least mature peril for the industry. The recent Los Angeles wildfires illustrate the trend toward more drought, higher winds and, consequently, more fires across the western U.S. Losses from severe convective storms (SCS), a category that includes tornadoes, hail and straight-line winds, have also surged in recent years, making SCS a focus area for the modeling community.
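One way to see why highly correlated vendor forecasts add little is a standard statistical result: if n forecasts each have the same error variance and every pair has error correlation ρ, the variance of their average is σ²[ρ + (1 − ρ)/n]. The sketch below assumes exactly that simplified setup (equal error variances, a single common correlation), which real vendor models will not satisfy precisely; the function name is hypothetical.

```python
def variance_ratio_of_average(n_models: int, rho: float) -> float:
    """Error variance of the average of n equally noisy forecasts with common
    pairwise error correlation rho, relative to a single forecast's variance.

    Derived from Var(mean) = (1/n^2) * [n*s^2 + n*(n-1)*rho*s^2]
                           = s^2 * [rho + (1 - rho)/n].
    """
    if n_models < 1:
        raise ValueError("need at least one model")
    if not -1.0 <= rho <= 1.0:
        raise ValueError("rho must lie in [-1, 1]")
    return rho + (1.0 - rho) / n_models


if __name__ == "__main__":
    for rho in (0.9, 0.3):
        ratio = variance_ratio_of_average(3, rho)
        print(f"rho={rho}: averaging 3 models keeps {ratio:.0%} of the error variance")
```

Under these assumptions, averaging three forecasts whose errors are correlated at 0.9 still leaves about 93% of a single model's error variance, while three forecasts correlated at only 0.3 cut it to about 53%. That is the intuition behind spending model budget on perils where vendors disagree more.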

Catastrophe models are most effective when their assumptions and data are refreshed regularly. Kunst notes that for every degree Celsius of warming, the atmosphere can hold about 7% more moisture, which in turn can produce heavier rain and more flooding. We should ensure peril models are updated regularly to reflect such changing assumptions. Property reconstruction costs are another critical model assumption, since they drive the estimated financial impact of a weather event. Aerial imagery, geocoding and AI-based tools are becoming essential for tailoring models to a specific region, neighborhood and home. For example, a home’s first-floor height and ground elevation are strong predictors of its flood risk. This level of data granularity is becoming increasingly common in the industry and enhances the predictive power of catastrophe models.
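The roughly 7%-per-degree figure compounds with warming. The sketch below assumes a constant 7% rate applied multiplicatively per degree Celsius, a common rule-of-thumb simplification; the function name and default rate are illustrative, and increased moisture capacity does not translate one-for-one into rainfall or flood losses.

```python
def moisture_multiplier(delta_t_celsius: float,
                        rate_per_degree: float = 0.07) -> float:
    """Approximate ratio of atmospheric moisture-holding capacity after
    warming by delta_t_celsius, assuming a constant compound rate per degree."""
    return (1.0 + rate_per_degree) ** delta_t_celsius


if __name__ == "__main__":
    for dt in (1.0, 1.5, 2.0):
        pct = (moisture_multiplier(dt) - 1.0) * 100
        print(f"+{dt} °C -> about {pct:.1f}% more moisture capacity")
```

Under this assumption, warming of 1.5 °C implies roughly 10.7% more moisture-holding capacity, which is the kind of shift a peril model's precipitation assumptions would need to reflect.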

Modeling actuaries can agree that more granular data is better. But at a time when collecting ever more consumer-level data is increasingly sensitive and risky, how can we encourage the public to willingly share more personal data with insurers? Kunst and Williams believe public education is key: consumers need to understand that more accurate catastrophe modeling and pricing is a “win-win-win” for individuals, insurers and society. If we can show consumers how accurately models predict insurance needs at the individual home level, we can make the case that insurers will be able to offer better protection when it is needed most. The ultimate result would be less subsidization, fairer insurance pricing and a far more stable insurance market for consumers.

Jourdan Vasapolli, ACAS, is lead actuary at USAA and a guest columnist for AR.