“The term itself is vague, but it is getting at something that is real…Big Data is a tagline for a process that has the potential to transform everything.”—Jon Kleinberg, Cornell University

Big data looms large. For years, we have been told that “data is the new oil”; it’s a management revolution; it’s the next frontier for innovation, competition and productivity. An influential Wired magazine article even declared that big data marks the end of the scientific method as we know it! The claims range from the usefully provocative to the manifestly absurd, leaving business leaders, actuaries and data scientists the task of separating the conceptual signal from the marketing noise.

It is easy to see why the messages have gained traction: Google Translate (the innovation that inspired the Wired article mentioned above) uses brute-force analysis of word associations detected in massive stores of data to translate, say, Polish into Portuguese. The Obama reelection campaign made inspired use of both predictive modeling and behavioral nudge tactics to win what has been called “the first big data election.” Netflix built its House of Cards on a foundation of big data: it observed that a significant subset of hard-core fans of the actor Kevin Spacey and the director David Fincher were also avid viewers of a certain BBC miniseries called House of Cards. So there really is some signal amid the rhetorical noise: big data has helped put a dent in the problem of machine translation, contributed to winning a major election and been used to good effect in choosing the creative team for a celebrated television series. There have been many other examples of data-fueled innovation, and there will surely be many more to come.

But while the term does get at something real, it is suffused with two sorts of ambiguity. The first is definitional. Big data is typically defined either as data whose sheer size causes problems for standard data management and analysis tools, or as data marked by “the 3 Vs”: volume, velocity and variety (the last encompassing such unstructured data sources as free-form text, photographs or recordings of speech). Besides being fuzzy, such definitions are pegged to the rapidly moving target of computer storage and processing power. This is not to say that “big data” is meaningless or doesn’t exist, only that the line between what does and does not qualify as “big” is blurry and likely to shift over time.

The second type of ambiguity is semantic. Particularly in the popular and business press, “big data” is increasingly used as a tagline for such applications of business analytics as human resource analytics, medical decision support and predictive modeling for insurance underwriting or claims triage. This leads some of us to (half-seriously) propose a more expansive definition of big data as “whatever doesn’t fit into a spreadsheet.” Though somewhat flip, this might actually be the most useful definition: in this conception, “big” is less a matter of size than of analytical complexity. Big data calls for more than the sort of manipulations analysts perform in spreadsheets. It calls for data science. Used in this colloquial sense, “big data” is analogous to “rocket science”: a harmless bit of metaphorical mental shorthand.

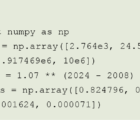

Unfortunately, this semantic ambiguity has engendered confusion that can in turn lead to strategic errors. There is little doubt that both data volumes and instances of data-fueled innovation are set to grow over time. But it is not clear that framing business analytics discussions in terms of data size or big data technology is particularly helpful. Doing so abets the misconception that big data and the requisite hardware are necessary for analytics projects to yield commensurately big value. This belief is false for epistemological, methodological and strategic reasons. First, data is simply not the same thing as information. For example, recording each outcome of millions of exchangeable coin tosses conveys no more information for predicting the next toss than recording a mere two numbers: the number of tosses and the number that landed heads. Analogously, transactional credit data and second-by-second snapshots of telematics driving behavior would presumably be considered big data sources. But for the purpose of predicting risk-level loss propensities, judiciously crafted predictive variables that summarize large numbers of atomic transactions will typically suffice. What matters is less the degree to which such data sources are “big” than the relevance of the information contained in the derived data features.
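The coin-toss point can be checked directly. The sketch below rests on assumptions not stated in the column: the tosses are modeled as independent Bernoulli(p) trials (one common way to treat exchangeable tosses), and the prediction for the next toss uses Laplace's rule of succession, which corresponds to a uniform prior on p. The function names are illustrative only. The log-likelihood computed by walking through every recorded toss matches the one computed from the two counts alone, and shuffling the record changes nothing.

```python
import math
import random

def log_likelihood_from_sequence(tosses, p):
    """Log-likelihood of a full toss record (1 = heads, 0 = tails), assuming
    independent Bernoulli(p) tosses; every individual outcome is visited."""
    return math.fsum(math.log(p) if t == 1 else math.log(1 - p) for t in tosses)

def log_likelihood_from_counts(n_tosses, n_heads, p):
    """The same log-likelihood computed from the two summary numbers alone."""
    return n_heads * math.log(p) + (n_tosses - n_heads) * math.log(1 - p)

def prob_next_heads(n_tosses, n_heads):
    """Laplace's rule of succession: P(next toss is heads) under an assumed
    uniform prior on p. It needs only the two counts."""
    return (n_heads + 1) / (n_tosses + 2)

rng = random.Random(0)
tosses = [int(rng.random() < 0.5) for _ in range(100_000)]
n, k = len(tosses), sum(tosses)

# The full record and the pair (n, k) give the same likelihood for every p tried.
for p in (0.3, 0.5, 0.7):
    assert math.isclose(log_likelihood_from_sequence(tosses, p),
                        log_likelihood_from_counts(n, k, p), rel_tol=1e-9)

# Reordering the record changes nothing either: only the counts matter.
shuffled = tosses[:]
rng.shuffle(shuffled)
assert math.isclose(log_likelihood_from_sequence(shuffled, 0.5),
                    log_likelihood_from_counts(n, k, 0.5), rel_tol=1e-9)

print(n, k, prob_next_heads(n, k))
```

In statistical terms, the pair (n, k) is a sufficient statistic for p under this model: storing the 100,000 individual outcomes adds volume but no predictive information.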

A related point is that information is not the same thing as relevant information. For example, millions of tweets from a biased sample might be less useful for a particular inferential task than a small but judiciously selected sample of survey responses. Big data and machine-learning discussions tend to focus on mining large datasets for patterns. But it is often necessary to consider not just the data itself but also the process that generated it. Loss reserving and pricing actuaries who regularly work with data reflecting historical changes in claims handling practices, pricing plans or mixes of business are keenly aware of this point.
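The biased-sample point can be illustrated with a small simulation. All numbers here are hypothetical and chosen purely for illustration, not drawn from any real tweet or survey data: a synthetic population of 1,000,000 in which exactly 30% have some trait, with the trait concentrated (60%) in a 20% “online” subgroup that stands in for a biased source such as tweets. A sample of 200,000 drawn only from that subgroup badly misses the true rate, while a simple random sample of 1,000 typically lands close to it.

```python
import random

def build_population(size=1_000_000):
    """Hypothetical synthetic population (not real data). The first 20% of records
    form an 'online' subgroup in which 60% have the trait; among the remaining 80%,
    only 22.5% do. Overall prevalence is therefore exactly 30%."""
    n_online = size // 5
    n_offline = size - n_online
    online = [i < n_online * 3 // 5 for i in range(n_online)]      # 60% True
    offline = [i < n_offline * 9 // 40 for i in range(n_offline)]  # 22.5% True
    return online + offline, n_online

def prevalence(sample):
    """Share of records that have the trait (True counts as 1)."""
    return sum(sample) / len(sample)

population, n_online = build_population()

# Large but biased: every record from the online subgroup, none from elsewhere.
big_biased = population[:n_online]

# Small but selected by simple random sampling from the whole population.
small_random = random.Random(42).sample(population, 1_000)

print(f"true rate:          {prevalence(population):.3f}")
print(f"biased, n={len(big_biased):,}: {prevalence(big_biased):.3f}")
print(f"random, n={len(small_random):,}:   {prevalence(small_random):.3f}")
```

Enlarging the biased sample does nothing to correct its estimate; only knowledge of how the records were generated (here, the subgroup split) would let an analyst reweight them.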

Though fundamental, this point is often neglected even in highly prominent applications. For example, Google Flu Trends made a splash in 2008 with an innovative use of Internet search data to forecast flu outbreaks. But in recent years, the Google algorithm has overestimated the number of flu cases by a wide margin. In an article published in Science in March 2014, a group of computational social scientists led by David Lazer identified a key problem: Google periodically tweaks its search engine, which changes the distribution of search terms. Yet the Flu Trends algorithm was apparently not recalibrated to reflect these changes to the data-generating process. The Lazer article characterizes the episode as an instance of “big data hubris…the often implicit assumption that big data are a substitute for, rather than supplement to, traditional data collection and analysis.” The idea of using Google search data to predict flu outbreaks is certainly ingenious and valuable, but framing the innovation in “big data” terms, with the implicit suggestion that the sheer size of the data somehow obviates the need for traditional methodology, is badly misleading.

So, yes, the societal and business trends that the term “big data” points to are both real and important. But as an organizing principle for methodological and strategic discussions, “big data” is of dubious value. Indeed, it abets misconceptions that can lead to serious methodological and strategic errors. I propose that, particularly in the actuarial domain, “behavioral data” would be a more productive organizing principle for that of which we speak. But—saved by the bell—I am out of space. This will be the subject of a future column.

Editor’s note: For more on big data, see “Too Big To Ignore: When Does Big Data Provide Big Value?” in Deloitte Review 12, 2013.